Photo by Volodymyr Hryshchenko on Unsplash

Breaking Down the Misconceptions: The Truth About ChatGPT's Understanding of Language

Uncovering the Limits of ChatGPT: Why It Can't Fully Understand the Meaning Behind Your Words

In the age of technology, chatbots have become increasingly popular as a way for humans to communicate with machines. Among the most sophisticated is ChatGPT, a chatbot built on a large language model developed by OpenAI that uses deep learning to generate human-like responses to text inputs. While ChatGPT has been praised for its impressive language processing capabilities, it is important to understand that it is not perfect.

In fact, ChatGPT cannot truly understand the meaning of what we say to it 💥. YES, that's true!!

There is a thought experiment in philosophy called the Chinese Room. The Chinese Room argument was first proposed by philosopher John Searle in 1980 as a counter-argument to the claim that a computer program could understand language and engage in intelligent conversation.

• The argument involves a thought experiment in which a person who does not speak or understand Chinese is placed in a room with a set of instructions in English for manipulating Chinese characters.

• The person follows the instructions and produces responses to questions posed in Chinese.

• The person does all of this without actually understanding the language or the meaning of the questions and answers.

Searle's argument is that a computer program, like the person in the Chinese Room, can manipulate symbols according to rules without understanding their meaning. Therefore, the program does not truly understand the language; it simply processes information according to predetermined rules. I first came across this argument somewhere on Twitter or LinkedIn.
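To make the rule-following idea concrete, here is a minimal Python sketch of the room as a lookup table. Everything in it is an assumption made for illustration: the name `RULE_BOOK`, the function `chinese_room`, and the three Chinese phrases are invented here and do not come from Searle's paper. The point is only that the function returns sensible-looking replies while nothing in the program knows what any symbol means.

```python
# A toy "Chinese Room": replies are produced purely by matching symbols
# against a rule book. The rules below are hypothetical examples.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",        # "How are you?" -> "I am fine, thanks."
    "你叫什么名字？": "我没有名字。",    # "What is your name?" -> "I have no name."
}

# Reply used when no rule matches ("Please say that again.").
FALLBACK = "请再说一遍。"


def chinese_room(question: str) -> str:
    """Return whatever reply the rule book prescribes for these symbols."""
    return RULE_BOOK.get(question, FALLBACK)


print(chinese_room("你好吗？"))
```

The English translations live only in the comments, which the program never reads. From the outside the replies look fluent, yet the code would work just as well if the symbols were replaced with arbitrary shapes.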

To understand this better, let's consider an example. Suppose you ask ChatGPT the question, "What is the meaning of life?" ChatGPT may generate a response based on its training data, such as "The meaning of life is subjective and can differ from person to person." However, this response is not based on a true understanding of the concept of meaning, nor does it reflect ChatGPT's own beliefs or values. Rather, the response is based on statistical patterns in the data it was trained on, and does not involve genuine comprehension or interpretation of the question.
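The phrase "statistical patterns" can be illustrated with a toy next-word predictor. This is a deliberately tiny sketch and not how ChatGPT is implemented: ChatGPT uses a large neural network, while the code below only counts which word follows which in a made-up three-sentence corpus. The corpus and the helper names `train_bigrams`, `most_likely_next`, and `generate` are all assumptions made for this example.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration, not real training data).
CORPUS = (
    "the meaning of life is subjective . "
    "the meaning of life is personal . "
    "the meaning of words is subjective ."
)


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    tokens = text.split()
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


def generate(follows, start, max_words=8):
    """Repeatedly append the most likely next word until a full stop."""
    words = [start]
    while len(words) < max_words:
        nxt = most_likely_next(follows, words[-1])
        if nxt is None or nxt == ".":
            break
        words.append(nxt)
    return " ".join(words)


model = train_bigrams(CORPUS)
print(generate(model, "the"))  # prints: the meaning of life is subjective
```

The output reads like an opinion about life, but it is only the most frequent continuation found in the counts. A real language model is vastly more capable, yet the same question applies to it: is producing a likely continuation the same thing as understanding?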

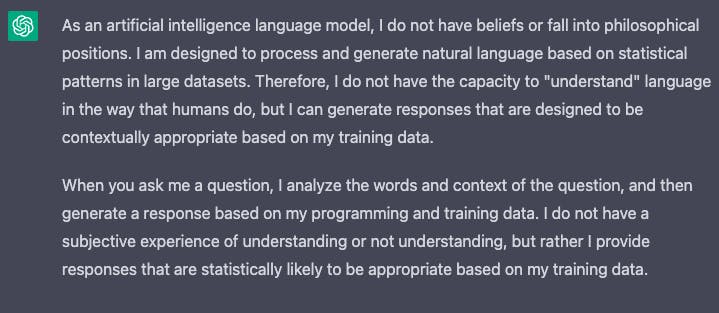

Then a thought came to my mind: let's ask ChatGPT itself whether it agrees with this argument or not. The reply blew my mind 🤯.

In conclusion, while ChatGPT is a powerful tool for generating human-like responses to text inputs, it cannot truly understand the meaning of what we say to it. The Chinese Room argument and a closer look at the limitations of language processing in machines reveal the complexity of language and the importance of human interpretation and communication.

As we continue to develop and use language models like ChatGPT, it is important to recognize their limitations and use them in ways that are ethical and responsible.

What are your views on this? I welcome constructive criticism and alternative viewpoints, so if you have any thoughts or feedback on this analysis, please share them in the comments section below.

Thank you for reading 😁.
