Phi-2 has an MIT license now! Why not perform RAG using it?

SLMs (small language models) are not really small!

Yesterday I read a few posts saying that the license for Microsoft's Phi-2 had changed to MIT. So what is all the fuss about?

The old license (the Microsoft Research License) only allowed non-commercial, research use. You could experiment with the model locally, but you could not ship it in a commercial product.

In case you don't know, the MIT License (named after the Massachusetts Institute of Technology) is a permissive open-source license. It allows free use, modification, distribution, and sublicensing of the software, as long as the copyright and license notice are kept. It's considered one of the most permissive open-source licenses. (It means you can use it commercially 🤑)

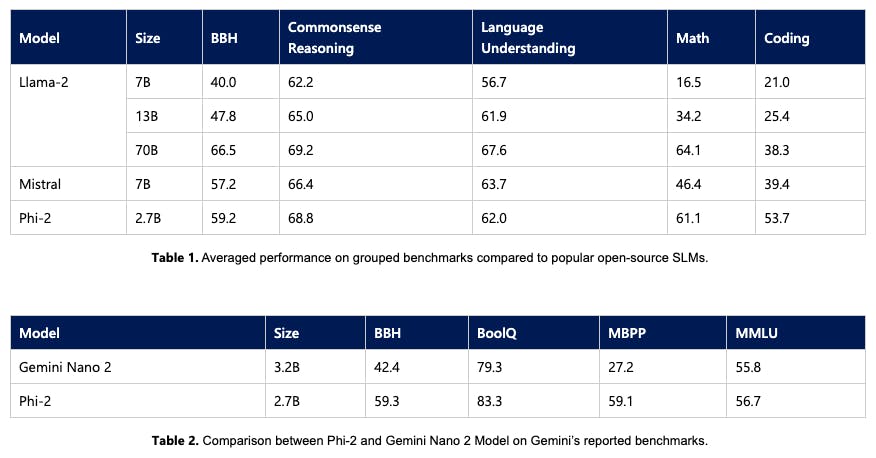

Phi-2 is a 2.7-billion-parameter language model built by the Microsoft Research team and trained on 1.4T tokens, including synthetic data. It scores 56.7 on MMLU, which, according to Microsoft's reported results, puts it ahead of Google Gemini Nano.

MMLU (Massive Multitask Language Understanding): a benchmark of multiple-choice questions covering 57 subjects across STEM, the humanities, the social sciences, and professional fields. It measures the knowledge and reasoning a model has picked up, rather than generation tasks such as translation or summarization. The final MMLU score is an accuracy figure averaged across the tasks, giving a single number for the model's overall performance. Learn more about MMLU here.
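To make the averaging step concrete, here is a minimal sketch. It assumes each task is scored by accuracy on multiple-choice questions and that the final number is the plain mean of the per-task scores. The records and function names are made up for illustration; they are not real benchmark items or Phi-2 outputs.

```python
from collections import defaultdict

# Hypothetical records: (subject, model_answer, correct_answer).
results = [
    ("astronomy", "B", "B"),
    ("astronomy", "C", "A"),
    ("world_history", "D", "D"),
    ("world_history", "A", "A"),
]


def per_subject_accuracy(records):
    """Fraction of correctly answered questions for each subject."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for subject, predicted, expected in records:
        total[subject] += 1
        correct[subject] += int(predicted == expected)
    return {subject: correct[subject] / total[subject] for subject in total}


def macro_average(scores):
    """Plain mean of the per-subject scores (every subject weighs the same)."""
    return sum(scores.values()) / len(scores)


subject_scores = per_subject_accuracy(results)
overall = macro_average(subject_scores)
print(subject_scores, overall)
```

Averaging per subject first means a subject with few questions counts as much as one with many; averaging over all questions at once would weight them differently.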

Some people are calling it an LLM, but it's actually an SLM (Small Language Model). As far as I remember, one article I read said that a language model is called a Large Language Model only if it has more than 6B parameters.

Phi-2's synthetic training corpus was augmented with carefully curated web data. Microsoft attributes the model's robustness and competence to this dual-source approach. The training dataset itself comprises 250 billion tokens, and the model was trained for 1.4T tokens in total, so it saw the data more than once. Microsoft has not made the data public, but the model card describes it as a mix of NLP synthetic data created with GPT-3.5 and filtered web data from Falcon RefinedWeb and SlimPajama, assessed by GPT-4.

It is really fascinating to see such a small model outperform much larger LLMs on many benchmarks. Here is the official article.

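What are the benefits of using small models?

You can fine-tune them quickly ⌛️

They can be run locally 💻

They require less compute: running a 7B or 13B model typically takes around 12-14GB of GPU memory or more, depending on precision and quantization, while Phi-2's 2.7B parameters need roughly 5.4GB for the weights at 16-bit precision.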
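A rough back-of-the-envelope check of the memory point above: the weights alone take about (number of parameters) x (bytes per parameter). This sketch assumes a few common precisions and ignores activations, the KV cache, and framework overhead, so real usage will be higher. The helper name is hypothetical.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params, dtype="float16"):
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in [("Phi-2 (2.7B)", 2.7e9), ("7B", 7e9), ("13B", 13e9)]:
    print(f"{name}: {weight_memory_gb(params):.1f} GB in float16, "
          f"{weight_memory_gb(params, 'int4'):.1f} GB in int4")
```

At 16-bit precision this works out to about 5.4 GB for Phi-2 versus about 14 GB for a 7B model, which is why larger models usually need quantization to fit on a free Colab GPU.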

How to run Phi-2 on Google Colab?

I will show two ways by which you can use Microsoft's Phi-2.

Normal way

RAG (Retrieval-Augmented Generation) using LlamaIndex

Normal way

The normal way uses Hugging Face's code to run inference directly on Phi-2, without RAG or any fine-tuning. I am using Google Colab with the T4 runtime type.

You can read more about Phi-2 in its model card.

Install required packages

!pip install langchain torch transformers sentencepiece accelerate bitsandbytes einops sentence-transformers

langchain - LangChain is a framework for developing applications powered by language models.

transformers - provides APIs and tools to easily download and train state-of-the-art pretrained models.

sentencepiece - A tokenizer library.

accelerate - Accelerate is a library that enables the same PyTorch code to be run across any distributed configuration.

bitsandbytes - A lightweight wrapper around CUDA custom functions, used for 8-bit and 4-bit quantization.

einops - Flexible and powerful tensor operations for readable and reliable code.

from transformers import AutoTokenizer, AutoModelForCausalLM

from langchain import HuggingFacePipeline

import transformers

import torch

# Get model's tokenizer

tokenizer = AutoTokenizer.from_pretrained(

"microsoft/phi-2", #path of model

trust_remote_code = True

)

Load the model; this will take a few minutes. The base model checkpoint is split into 2 safetensors files.

model = AutoModelForCausalLM.from_pretrained(

"microsoft/phi-2",

torch_dtype = "auto",

device_map = "auto",

trust_remote_code = True

)

Create a pipeline

text_pipeline = transformers.pipeline(
    "text-generation",
    model=model,  # already placed on the GPU by device_map="auto" above
    tokenizer=tokenizer,
    max_new_tokens=256,  # increase this if you want longer output
    do_sample=True,  # temperature only takes effect when sampling is enabled
    temperature=0.5,  # controls the randomness of the generated output
)

# Reuse the end-of-sequence token for padding
text_pipeline.model.config.pad_token_id = text_pipeline.model.config.eos_token_id

# Wrap the pipeline so LangChain can use it as an LLM
llm = HuggingFacePipeline(pipeline=text_pipeline)
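To see what the `temperature` setting does, here is a small, self-contained sketch of temperature-scaled softmax over a few made-up logits. It illustrates the idea only and is not the code `transformers` runs internally. Note that in `transformers` the temperature only matters when sampling is enabled (`do_sample=True`).

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (1.0, 0.5, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

Lower temperatures push more probability onto the top-scoring token, so the output becomes more predictable; higher temperatures spread it out and make the text more varied.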

Phi-2's model card documents this prompt format for question answering:

Instruct: <prompt>\nOutput:
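For reference, the Phi-2 model card lists the question-answering format as `Instruct: <prompt>\nOutput:`. The sketch below builds such a prompt and pulls the answer back out of the generated text. Both helper names are hypothetical, and the extraction assumes the model echoes the prompt before its answer, which is how a text-generation pipeline behaves by default.

```python
def build_phi2_prompt(instruction: str) -> str:
    """Wrap a user instruction in the QA format from the Phi-2 model card."""
    return f"Instruct: {instruction.strip()}\nOutput:"


def extract_answer(generated_text: str) -> str:
    """Return whatever follows the first 'Output:' marker, without surrounding spaces."""
    return generated_text.split("Output:", 1)[-1].strip()


prompt = build_phi2_prompt("  write short essay on India ")
print(repr(prompt))

# Simulated model output: the prompt followed by a made-up completion.
fake_generation = prompt + " India is a vast and diverse country."
print(extract_answer(fake_generation))
```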

from langchain.prompts import PromptTemplate

from langchain.chains.llm import LLMChain

task_template = """You are a friendly chatbot assistant that gives structured output.
Your role is to arrange the given task in this structure.
Instruct: {instruction}
Output:"""

task_prompt_template = PromptTemplate(
    input_variables=["instruction"], template=task_template
)

# We are using LLMChain here; LangChain provides several other chain types

llm_chain = LLMChain(prompt=task_prompt_template,llm=llm)

#Query

question = "write short essay on India"

print(llm_chain.run(question))

This is what the output looks like

## Introduction

India is a vast and diverse country with a rich history, culture, and heritage. It is the seventh-largest country in the world by area and the second-most populous country with over 1.3 billion people. India has a long and complex history that spans thousands of years and involves various dynasties, empires, religions, and cultures. In this essay, I will discuss some of the major aspects of India's history, such as its ancient civilizations, its colonial and independence struggles, and its modern development and challenges.

## Ancient Civilizations

India has a long and illustrious history of ancient civilizations that flourished in different regions and periods. Some of the most prominent and influential civilizations are: - The Indus Valley Civilization: This was one of the earliest and most advanced civilizations in the world, dating back to around 2500 BCE. It was located in the northwestern part of India, along the Indus River and its tributaries. It had a sophisticated urban planning, a standardized writing system, a complex trade network, and a diverse culture. The Indus Valley Civilization declined around 1900 BCE, possibly due to environmental changes, invasions, or internal conflicts.

RAG using LlamaIndex

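Install all the required packages. The imports used below follow the pre-0.10 llama-index module layout, so the version is pinned here.

!pip install -q pypdf einops accelerate "llama-index<0.10" python-dotenv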

pypdf - pypdf is a free and open-source pure-Python PDF library capable of splitting, merging, cropping, and transforming the pages of PDF files. LlamaIndex uses pypdf to read PDF files.

llama-index - LlamaIndex is a data framework for LLM-based applications to ingest, structure, and access private or domain-specific data.

Import all the necessary things

from llama_index import VectorStoreIndex, SimpleDirectoryReader, ServiceContext

from llama_index.llms import HuggingFaceLLM #to load the model

import torch

Here we are reading a file. SimpleDirectoryReader supports multiple file extensions.

# Read every supported file in the Data folder
documents = SimpleDirectoryReader("/content/Data").load_data()

# To load specific files instead, pass input_files:
# documents = SimpleDirectoryReader(input_files=["/content/Data/file1.pdf"]).load_data()
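Before indexing, it can help to check which files in the folder are likely to be picked up. The sketch below uses only the standard library; the extension list is an illustrative assumption, not the reader's actual list of supported formats, and the helper name is hypothetical.

```python
from pathlib import Path

# Assumption: a small illustrative subset of extensions, not the reader's real list.
CANDIDATE_EXTENSIONS = {".pdf", ".txt", ".md", ".docx"}


def list_candidate_files(folder, extensions=CANDIDATE_EXTENSIONS):
    """Return the files directly inside `folder` whose extension is in `extensions`."""
    root = Path(folder)
    if not root.is_dir():
        raise FileNotFoundError(f"{folder} is not a directory")
    return sorted(
        path for path in root.iterdir()
        if path.is_file() and path.suffix.lower() in extensions
    )


# Only runs where the folder exists, for example inside the Colab session.
if Path("/content/Data").is_dir():
    for path in list_candidate_files("/content/Data"):
        print(path.name, path.stat().st_size, "bytes")
```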

Add a prompt template

from llama_index.prompts.prompts import SimpleInputPrompt

system_prompt = "You are a friendly Q&A assistant. Your goal is to answer questions as accurately as possible based on the given text. Return only the answer; do not write anything else."

Phi-2 expects this prompt format (taken from its model card):

Instruct: <prompt>\nOutput:

# This will wrap the default prompts that are internal to LlamaIndex

query_wrapper_prompt = SimpleInputPrompt("Instruct: {query_str}\nOutput:")

Here we are defining an LLM and its parameters. You can play with these to get expected outcomes.

llm = HuggingFaceLLM(

context_window=2048, # input length; Phi-2's context length is 2048 tokens

max_new_tokens=128, # output length

generate_kwargs={"temperature": 0.2, "do_sample": True},

system_prompt=system_prompt,

query_wrapper_prompt=query_wrapper_prompt,

tokenizer_name="microsoft/phi-2",

model_name="microsoft/phi-2",

device_map="auto",

model_kwargs={"torch_dtype": torch.bfloat16}

)

Now we have to create embeddings of the file that we will be using for RAG (Retrieval Augmented Generation)

from llama_index.embeddings import HuggingFaceEmbedding

embed_model = HuggingFaceEmbedding(model_name="BAAI/bge-small-en-v1.5")

Set the service context. It is the part of LlamaIndex that bundles the LLM, the embedding model, and settings such as chunk size, and it is used when creating the index for the given files. I am using my resume for this example.

service_context = ServiceContext.from_defaults(

chunk_size=1024,

llm=llm,

embed_model=embed_model

)

# Chunking can be a really good way to improve the LLM's precision.

# There are various chunking strategies that can help.

Explore more about chunking here.
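As one example of a chunking strategy, here is a minimal sketch of fixed-size chunks with overlap. It splits on whitespace and counts words, whereas `chunk_size` in LlamaIndex counts tokens, so treat it as an illustration of the idea rather than what the library does. The function name and defaults are hypothetical.

```python
def chunk_words(text, chunk_size=200, overlap=20):
    """Split text into chunks of `chunk_size` words, each sharing `overlap` words with the previous one."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(sample, chunk_size=4, overlap=1):
    print(chunk)
```

The overlap keeps a sentence that straddles a boundary visible in both neighbouring chunks, at the cost of storing some text twice.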

Now we will build the index and create a query engine from it.

If you are familiar with LangChain, a query engine works much like a chain.

# You can also use a different vector DB to store the index.

index = VectorStoreIndex.from_documents(documents, service_context=service_context)

query_engine = index.as_query_engine()
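Conceptually, a query engine like this embeds the question, finds the most similar chunks, and passes them to the LLM as context. The sketch below shows that idea with hand-made 3-dimensional vectors and cosine similarity. It is not LlamaIndex's internal code: the vectors, chunk texts, prompt wording, and function names are all made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec, chunk_vecs, chunks, top_k=2):
    """Return the `top_k` chunks whose vectors are most similar to the query vector."""
    ranked = sorted(
        zip(chunks, chunk_vecs),
        key=lambda pair: cosine_similarity(query_vec, pair[1]),
        reverse=True,
    )
    return [chunk for chunk, _ in ranked[:top_k]]


def build_rag_prompt(question, context_chunks):
    """Put the retrieved chunks in front of the question."""
    context = "\n".join(context_chunks)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


# Toy data: in a real pipeline these vectors come from the embedding model.
chunks = ["Skills: Python, NLP, ML", "Hobbies: hiking", "Experience: Python developer intern"]
chunk_vecs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]]
query_vec = [0.9, 0.1, 0.0]

top_chunks = retrieve(query_vec, chunk_vecs, chunks, top_k=2)
print(build_rag_prompt("What are the candidate's skills?", top_chunks))
```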

Now let's test our RAG pipeline

response = query_engine.query("What is this document about?")

#print(response) will print some other text along with the actual response.

# To ignore metadata and stuff, use the following line

response.response

Here is the output:

This document is about Kaushal Powar, a Python developer from India. He has experience in NLP, ML, and Python. He is currently working as a Python developer Intern at ImaginorLabs. He has done a work as Research Intern at Wayne University in the US. He has also worked as a Student Ambassador at Streamlit. He has volunteered at the Pinecone Hackathon. He has also worked on projects like Talk to PDF and Rumi GPT.

Isn't this cool!! A Small Language Model (SLM) with just 2.7 billion parameters works this well. I will be doing some fine-tuning on it. In the next blog I will share how to fine-tune the Phi-2 model.

References

https://www.microsoft.com/en-us/research/blog/phi-2-the-surprising-power-of-small-language-models/

https://docs.llamaindex.ai/en/stable/

https://huggingface.co/microsoft/phi-2

Thank you for reading 😁.

I do welcome constructive criticism and alternative viewpoints. If you have any thoughts or feedback on our analysis, please feel free to share them in the comments section below.
